| Version 8 (modified by binoy, 56 years ago) (diff) |

|---|

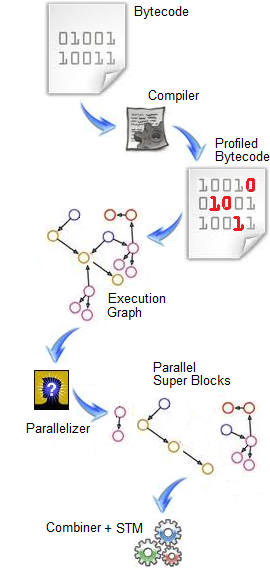

How does it work?

HydraVM works in four phases. First, HydraVM accepts program bytecode and converts it to architecture-specific machine code. We treat the program as a set of basic blocks, where each basic block is a sequence of non-branching instructions that ends with either a branch instruction (conditional or unconditional) or a return. Any program can thus be represented by a graph in which nodes represent basic blocks and edges represent the program control flow, i.e., an execution graph. Basic blocks can be determined at compile time. Our main goal, however, is to determine how frequently each basic block is reached. To collect this information, we modify the baseline compiler of Jikes RVM to plug additional instructions into the bytecode at the edges of the basic blocks; these instructions detect whenever a basic block is reached. We also plug in instructions that: 1) statically detect the set of variables accessed by each basic block, and 2) mark basic blocks that contain input/output operations, as they may need special handling during program reconstruction. This code modification doesn't affect the behavior of the original program. We call the modified program the profiled bytecode.
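The block partitioning and reachability counting described above can be illustrated with a small sketch. It is not HydraVM's or Jikes RVM's code: the three-opcode instruction set (`inc`, `if_lt`, `return`), the function names, and the interpreter loop are all assumptions made for illustration, and the counter increment stands in for the instructions plugged into the bytecode.

```python
from collections import Counter

# Hypothetical toy instruction set: (opcode, operand).
# "if_lt" jumps to the operand index while env["i"] < env["n"], else falls through.
BRANCHES = {"if_lt"}
TERMINATORS = BRANCHES | {"return"}

def basic_blocks(code):
    """Map the start index of each basic block to its half-open (start, end) range."""
    leaders = {0}
    for i, (op, arg) in enumerate(code):
        if op in BRANCHES:
            leaders.add(arg)              # a branch target starts a block
        if op in TERMINATORS and i + 1 < len(code):
            leaders.add(i + 1)            # so does the instruction after a terminator
    starts = sorted(leaders)
    ends = starts[1:] + [len(code)]
    return dict(zip(starts, zip(starts, ends)))

def run_profiled(code, env):
    """Interpret the toy code and count how often each block is entered."""
    blocks = basic_blocks(code)
    hits = Counter()
    pc = 0
    while True:
        if pc in blocks:
            hits[pc] += 1                 # stands in for the plugged-in profiling instruction
        op, arg = code[pc]
        if op == "return":
            return hits
        if op == "if_lt":
            pc = arg if env["i"] < env["n"] else pc + 1
        elif op == "inc":
            env[arg] += 1
            pc += 1
        else:
            raise ValueError(f"unknown opcode: {op}")

loop = [("inc", "i"), ("if_lt", 0), ("return", None)]
print(basic_blocks(loop))                      # two blocks: the loop body and the return
print(run_profiled(loop, {"i": 0, "n": 3}))    # the loop body is entered three times
```

The counts are a side effect of execution only; the values computed by the toy program are the same with or without them, which mirrors the claim that profiling doesn't change program behavior.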

With the profiled bytecode, we can construct the program execution graph, which shows basic blocks and variables as nodes and the execution flow as weighted edges, where the weight of an edge denotes the frequency with which its target block is reached. The execution graph has several benefits:

- Hot-spot portions of the code can be identified by examining the hot paths of the graph.

- Static data dependencies between blocks can be determined.

- Parallel execution patterns of the program can be identified.
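As a rough illustration of the first two benefits, the sketch below builds edge weights from a recorded sequence of block IDs and derives hot edges and static dependencies from per-block variable sets. The trace format, the `threshold` parameter, and the `(reads, writes)` representation are assumptions for this example, not details taken from HydraVM.

```python
from collections import Counter

def execution_graph(trace):
    """Weight each control-flow edge by how often it appears in a trace of block IDs."""
    return Counter(zip(trace, trace[1:]))

def hot_edges(graph, threshold):
    """Edges that carry at least `threshold` (a fraction) of all observed transitions."""
    total = sum(graph.values())
    return {edge for edge, weight in graph.items() if weight >= threshold * total}

def static_dependencies(accesses):
    """Pairs of blocks that share a variable which at least one of them writes.

    `accesses` maps a block ID to a (reads, writes) pair of variable-name sets.
    """
    deps = set()
    blocks = sorted(accesses)
    for i, a in enumerate(blocks):
        reads_a, writes_a = accesses[a]
        for b in blocks[i + 1:]:
            reads_b, writes_b = accesses[b]
            if writes_a & (reads_b | writes_b) or writes_b & reads_a:
                deps.add((a, b))
    return deps

trace = ["A", "B", "B", "B", "C"]
graph = execution_graph(trace)
print(graph)
print(hot_edges(graph, threshold=0.5))

accesses = {
    "A": ({"n"}, {"i"}),
    "B": ({"i", "n"}, {"i"}),
    "C": ({"x"}, {"y"}),
}
print(static_dependencies(accesses))   # only A and B conflict; C is independent of both
```

Blocks with no entry in the dependency set, such as `C` here, are candidates for parallel execution, subject to the aliasing caveats discussed in the final phase.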

We then develop a variant of the execution graph, called the dynamic execution graph, which captures the relationship between one execution of a basic block and later executions of the same block. If a basic block is executed more than once during the lifetime of the program, each execution appears as a separate block in the dynamic execution graph. This graph is useful for detecting dependencies between loop iterations.
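One way to picture the dynamic execution graph is to label every execution of a block with an occurrence number and then look for read-after-write dependencies between instances of the same block. The sketch below does this under stated assumptions: the `accesses(block, k)` callback, which returns the memory locations read and written by the k-th execution of a block, is hypothetical, as is the tuple encoding of locations.

```python
from collections import defaultdict

def dynamic_trace(trace):
    """Turn block IDs into instances: the k-th execution of B becomes (B, k)."""
    seen = defaultdict(int)
    instances = []
    for block in trace:
        instances.append((block, seen[block]))
        seen[block] += 1
    return instances

def loop_carried_dependencies(instances, accesses):
    """Return (writer, reader) instance pairs of the same block linked by read-after-write."""
    deps = []
    last_writer = {}                      # location -> instance that last wrote it
    for inst in instances:
        reads, writes = accesses(*inst)
        for loc in sorted(reads):
            writer = last_writer.get(loc)
            same_block = writer is not None and writer[0] == inst[0]
            if same_block and writer != inst and (writer, inst) not in deps:
                deps.append((writer, inst))
        for loc in writes:
            last_writer[loc] = inst
    return deps

def prefix_sum_accesses(block, k):
    """Hypothetical loop body B computing a[k+1] = a[k] + 1; A initializes a[0]."""
    if block == "A":
        return set(), {("a", 0)}
    if block == "B":
        return {("a", k)}, {("a", k + 1)}
    return set(), set()

instances = dynamic_trace(["A", "B", "B", "B", "C"])
print(instances)
print(loop_carried_dependencies(instances, prefix_sum_accesses))
```

In this example each iteration of `B` reads what the previous iteration wrote, so the iterations form a chain. A loop body that writes `a[k]` while reading only from a different array would yield no pairs, which indicates that its iterations could run in parallel.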

The third phase is the reconstruction of the program. In this phase, we divide the code into parts that are, ideally, independent of one another. These parts, called super blocks, represent subsets of the execution graph. No super block overlaps with another in the variables it accesses, and each one represents a long sequence of instructions, including branch statements, that commonly execute together in this pattern. Because a branch instruction has a taken and a not-taken path, a super block may contain one or both paths, depending on how frequently each is used. For example, in a biased branch one path is taken most of the time, so that path is included in the super block and the other path is left outside it. In an unbiased branch, on the other hand, both paths may be included in the super block. A super block therefore has multiple exits, determined by the program control flow during its execution. A super block also has multiple entries, as a jump or branch instruction may target any of the basic blocks that make it up. The parallelizer module orchestrates the construction of super blocks and distributes them over parallel threads. This may lead to out-of-order execution of the code, which we address in the next phase. I/O instructions are excluded from super blocks, as changing their execution order affects the program semantics.
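The treatment of biased and unbiased branches can be sketched as a graph traversal over weighted successors. This is an assumed policy for illustration, not the parallelizer's actual algorithm: the `bias` cutoff of 0.8, the `successors` map, and the function name are all hypothetical, and the check that super blocks access disjoint variables is left out.

```python
def grow_super_block(entry, successors, io_blocks=frozenset(), bias=0.8):
    """Collect the blocks of one super block starting at `entry`.

    `successors` maps a block ID to {successor ID: observed count}.
    Assumed policy: if one successor receives at least `bias` of the flow,
    the branch is biased and only that successor is included; otherwise all
    successors are. Blocks in `io_blocks` are never included.
    Returns (members, exits), where exits are edges leaving the super block.
    """
    members, exits = [], set()
    work = [entry]
    while work:
        block = work.pop()
        if block in members:
            continue
        members.append(block)
        outs = successors.get(block, {})
        total = sum(outs.values())
        biased = any(count >= bias * total for count in outs.values())
        for succ, count in sorted(outs.items()):
            cold_path = biased and count < bias * total
            if succ in io_blocks or cold_path:
                exits.add((block, succ))      # control leaves the super block here
            else:
                work.append(succ)
    return members, exits

successors = {
    "A": {"B": 90, "C": 10},   # biased branch: only B is included
    "B": {"D": 50, "E": 50},   # unbiased branch: both paths are included
    "D": {"F": 1},
    "E": {"F": 1},
}
members, exits = grow_super_block("A", successors, io_blocks={"F"})
print(sorted(members))
print(sorted(exits))
```

The result has three exits: the cold path to `C` and the two edges into the I/O block `F`. This matches the observation that a super block has multiple exits, and any member other than `A` could also serve as an entry if a branch elsewhere targets it.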

Finally, we combine and link super blocks according to their exits, so that the result behaves the same as the original program. This phase must preserve data consistency and program order, because the execution graph construction uses no pointer tracking technique to rule out aliasing. In addition, address arithmetic (e.g., arrays indexed with variables) can easily violate the assumptions of this reconstruction. To ensure data consistency, we therefore employ software transactional memory (STM), where each super block executing as a thread represents a transaction. Memory access violations are detected and resolved by the STM through transactional conflict detection, abort, roll-back, and retry. Program order is maintained by deferring the commit of transactions that complete early until their valid execution time.
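The combination of conflict detection, retry, and deferred commit can be illustrated with a single-threaded simulation. This is a minimal sketch under stated assumptions, not HydraVM's STM: the `Transaction` class, the per-location version counters, and the two-pass structure (speculate everything, then commit in program order) are all invented for the example.

```python
class Transaction:
    """Buffers writes and records the version of each location it reads."""

    def __init__(self, memory, versions):
        self.memory, self.versions = memory, versions
        self.read_versions, self.write_buffer = {}, {}

    def read(self, key):
        if key in self.write_buffer:          # read our own uncommitted write
            return self.write_buffer[key]
        self.read_versions.setdefault(key, self.versions.get(key, 0))
        return self.memory.get(key, 0)

    def write(self, key, value):
        self.write_buffer[key] = value        # invisible to others until commit

    def is_valid(self):
        """True if nothing this transaction read has been committed over since."""
        return all(self.versions.get(k, 0) == v for k, v in self.read_versions.items())

    def commit(self):
        for key, value in self.write_buffer.items():
            self.memory[key] = value
            self.versions[key] = self.versions.get(key, 0) + 1

def run_in_program_order(super_blocks, memory):
    """Run blocks speculatively, then commit them in program order. Returns retry count."""
    versions = {}
    speculative = []
    for body in super_blocks:                 # all blocks see the same initial state,
        tx = Transaction(memory, versions)    # as if they had started in parallel
        body(tx)
        speculative.append(tx)
    retries = 0
    for body, tx in zip(super_blocks, speculative):
        while not tx.is_valid():              # conflict: discard the buffer and re-execute
            tx = Transaction(memory, versions)
            body(tx)
            retries += 1
        tx.commit()                           # commits happen strictly in program order
    return retries

def block0(tx): tx.write("x", tx.read("x") + 1)
def block1(tx): tx.write("y", tx.read("x") * 2)   # depends on block0's write to x
def block2(tx): tx.write("z", 5)                  # independent of the others

memory = {"x": 1}
print(run_in_program_order([block0, block1, block2], memory), memory)
```

Here `block1` speculatively reads a stale `x`, fails validation once `block0` has committed, and is re-executed, so the final memory matches a sequential run. `block2` finishes early in a real parallel setting but would still wait for its turn to commit, which is the deferral described above.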